FLASH User's Guide

Version 1.6

January 2001

(ASC) Flash Center

University of Chicago

FLASH User's Guide

Version 1.6

January 2001

(ASC) Flash Center

University of Chicago

| Acknowledgments |

The FLASH Code Group is supported by the (ASC) Flash Center at the

University of Chicago under U. S. Department of Energy contract B341495.

Some of the test calculations described here were performed using the

Origin 2000 computer at Argonne National Laboratory and the (ASC) Blue

Mountain computer at Los Alamos National Laboratory.

The Center for Astrophysical Thermonuclear Flashes, or Flash Center, was founded at the University of Chicago in 1997 under contract to the United States Department of Energy as part of its Accelerated Strategic Computing Initiative ((ASC)). The goal of the Center is to solve several problems related to thermonuclear flashes on the surfaces of compact stars (neutron stars and white dwarfs), in particular X-ray bursts, Type Ia supernovae, and novae. To solve these problems requires the participants in the Center to develop new simulation tools capable of handling the extreme resolution and physical requirements imposed by conditions in these explosions, and to do so while making efficient use of the parallel supercomputers developed by the ASC project, the most powerful constructed to date.

The FLASH code (Fryxell et al. 2000) represents an important step along the road to this goal. FLASH is a modular, adaptive, parallel simulation code capable of handling general compressible flow problems in astrophysical environments. FLASH has been designed to allow users to configure initial and boundary conditions, change algorithms, and add new physical effects with minimal effort. It uses the PARAMESH library to manage a block-structured adaptive grid, placing resolution elements only where they are needed most. FLASH uses the Message-Passing Interface (MPI) library to achieve portability and scalability on a variety of different message-passing parallel computers.

This user's guide is designed to enable individuals unfamiliar with the FLASH code to quickly get acquainted with its structure and to move beyond the simple test problems distributed with FLASH, customizing it to suit their own needs. Section 2 discusses how to quickly get started with FLASH, describing how to configure, build, and run the code with one of the included test problems, then examine the resulting output. Users familiar with the capabilities of FLASH who wish to quickly `get their feet wet' with the code should begin with this section.

Section 3 describes the equations and algorithms used by the physics modules distributed with FLASH. It assumes that the reader has some familiarity both with the basic physics involved and with numerical hydrodynamics methods. This familiarity is absolutely essential in using FLASH (or any other simulation code) to arrive at meaningful solutions to physical problems. The novice reader is directed to an introductory text, examples of which include

Section 4 describes in more detail the use of the configuration and analysis tools distributed with FLASH. Section 5 describes the different test problems distributed with FLASH. The sixth section describes the FLASH code structure at length and gives detailed instructions for extending FLASH's capabilities by adding new problem setups. This section also discusses the code modules included with FLASH 1.6 and describes how new solvers may be integrated into the code. Finally, the seventh section gives guidance on contacting the authors of FLASH.

This section describes how to quickly get up and running with FLASH, showing how to configure and build it to solve the Sedov explosion problem, how to run it, and how to examine the output using IDL. To begin, verify that you have the following:

FLASH has been tested on the following Unix-based platforms. In addition, it may work with others not listed (see section 6.5).

To begin, unpack the FLASH source code distribution. If you have a Unix tar file, type `tar xvf FLASHX.Y.tar' (without the quotes), where X.Y is the FLASH version number (for example, use FLASH1.61.tar and FLASH1.61/ for FLASH version 1.61). If you are working with a CVS source tree, use `cvs checkout FLASHX.Y' to obtain a personal copy of the tree. You may need to obtain permission from the local CVS administrator to do this. In either case you will create a directory called FLASHX.Y/. Type `cd FLASHX.Y' to enter this directory.

Next, configure the FLASH source tree for the Sedov explosion problem. Type

./setup sedov -defaultsThis configures FLASH for the sedov problem, using the default hydrodynamic solver, equation of state, and I/O format defined for this problem. For the purpose of this quick start example, we will use the default I/O format, HDF 4. The source tree is configured to create a two-dimensional code by default.

If your system is not one of the configured sites (type ls source/sites for a list), or for some reason hostname does not return the correct site name, setup will attempt to locate your operating system type (determined via uname) in source/sites/Prototypes/. If this also does not succeed, you will need to do one of two things. If hostname or uname did not succeed, you can specify your site name or OS type using the -site or -ostype option to setup. Type ./setup without any options to see the syntax. If you are working on a new system with no available prototype, create a directory under source/sites with the name of your system (mkdir source/sites/`hostname`) and copy the file Makefile.h from a similar prototype directory to it. Then run ./setup sedov -defaults.

The next step is to edit the file object/Makefile.h. This file contains all of the site-dependent and architecture-dependent parts of the Makefile. Verify that the compiler settings (FCOMP, CCOMP, and CPPCOMP) and the library paths (LIB) are correct for your system. No other changes should be necessary at this stage (later on you may wish to fiddle with the compiler optimization settings). Future versions of FLASH will make use of the GNU configure program to handle this step.

From the FLASH root directory (ie. the directory from which you ran setup), execute gmake. This will compile the FLASH code. If you should have problems and need to recompile, `gmake clean' will remove all object files from the object/ directory, leaving the source configuration intact; `gmake realclean' will remove all files and links from object/. After `gmake realclean,' a new invocation of setup is required before the code can be built.

Assuming compilation and linking were successful, you should now find an executable named flashX in the object/ directory, where X is the major version number (e.g., X = 1 for X.Y = 1.61). You may wish to check that this is the case.

FLASH expects to find a flat text file named flash.par in the directory from which it is run. This file sets the values of various runtime parameters which determine the behavior of FLASH. If it is not present, FLASH will use default values for the runtime parameters, which may or may not produce desirable results. Here we will create a simple flash.par which sets a few parameters and allows the rest to take on default values. With your text editor, create flash.par in the main FLASH directory with the contents of Figure 1.

# runtime parameters |

This instructs FLASH to use up to four levels of adaptive mesh refinement (AMR) and to name the output files appropriately. We will not be starting from a checkpoint file (this is the default, but here it is explicitly set for purposes of illustration). Output files are to be written every 0.005 time units, and we will integrate until t=0.02 or 1000 timesteps have been taken, whichever comes first. The ratio of specific heats for the gas (g) is taken to be 1.4, and all four boundaries of the two-dimensional grid have outflow (zero-gradient or Neumann) boundary conditions. Note the format of the file: each line is a comment (denoted by a hash mark, #), blank, or of the form variable = value. String values are enclosed in double quotes ("). Boolean values are indicated in the Fortran style, .true. or .false.

We are now ready to run FLASH. To run FLASH on N processors, type

mpirun -np N object/flashXremembering to replace N and X with the appropriate values. Some systems may require you to start MPI programs with a different command; use whichever command is appropriate to your system. The FLASH executable can take one command-line argument, the name of the runtime parameter file. The default parameter file name is flash.par.

You should see a number of lines of output indicating that FLASH is initializing the Sedov problem, listing the initial parameters, and giving the timestep chosen at each step. After the run is finished, in the current directory you should find several files:

setenv XFLASH_DIR "$PWD/tools/idl"If you get a message indicating that IDL_PATH is not defined, just enter

setenv IDL_PATH "${XFLASH_DIR}:$IDL_PATH"

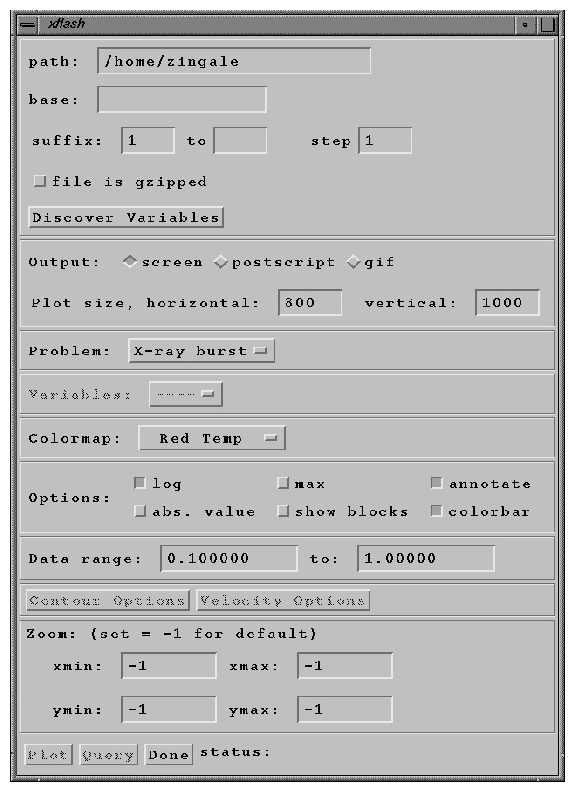

setenv IDL_PATH "$XFLASH_DIR"Now run IDL (idl) and enter xflash at the IDL> prompt. You should see a control panel widget as shown in Figure 2.

The path entry should be filled in for you with the current directory. Enter sedov_4_hdf_chk_ as the base filename and enter 4 as the suffix. (xflash can generate output for a number of consecutive files, but if you fill in only the beginning suffix, only one file is read.) Click the `Discover Variables' button to scan the file and generate the variable list. Choose the image format (screen, Postscript, GIF) and the problem type (in our case, Sedov). Selecting the problem type is only important for choosing default ranges for our plot; plots for other problems can be generated by ignoring this setting and overriding the default values for the data range and the coordinate ranges. Select the desired plotting variable and colormap. Under `Options,' select whether to plot the logarithm of the desired quantity, and select whether to plot the outlines of the AMR blocks. For very highly refined grids the block outlines can obscure the data, but they are useful for verifying that FLASH is putting resolution elements where they are needed. Finally, click `Velocity Options' to overlay the velocity field. The `xskip' and `yskip' parameters enable you plot only a fraction of the vectors so that they do not obscure the background plot.

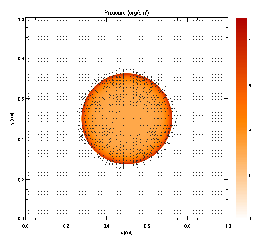

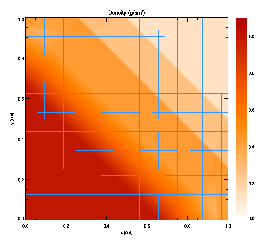

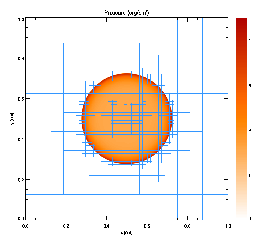

When the control panel settings are to your satisfaction, click the `Plot' button to generate the plot. For Postscript and GIF output, a file is created in the current directory. The result should look something like Figure 3, although this figure was generated from a run with eight levels of refinement rather than the four used in the quick start example run. With fewer levels of refinement the Cartesian grid causes the explosion to appear somewhat diamond-shaped.

FLASH is intended to be customized by the user to work with interesting initial and boundary conditions. In the following sections we will cover in more detail the algorithms and structure of FLASH and the sample problems and tools distributed with it.

FLASH provides a general simulation framework capable of incorporating several different types of physics module (e.g., hydrodynamics on structured or unstructured meshes, N-body solvers, reactive source terms, etc.) and initial or boundary conditions. However, it has been built to solve a particular class of problems, namely reactive, compressible flows in astrophysical environments. Thus, FLASH 1.x is distributed with a particular set of algorithms to handle compressible hydrodynamics on a block-structured adaptive mesh, together with an appropriate nuclear equation of state and nuclear reaction network. We treat each of these elements in turn.

The hydrodynamic module in FLASH 1.x is based on the PROMETHEUS code (Fryxell, Müller, and Arnett 1989). This code solves Euler's equations for compressible gas dynamics in one, two, or three spatial dimensions. These equations can be written in conservative form as

| ||||||||||||||||||||||||

| (4) |

| (5) |

For reactive flows, a separate advection equation must be solved for each chemical or nuclear species:

| (6) |

The equations are solved using a modified version of the Piecewise-Parabolic Method (PPM), which is described in detail in Woodward and Colella (1984) and Colella and Woodward (1984). PPM is a higher-order version of the method developed by Godunov (1959). Godunov's method uses a finite-volume spatial discretization of the Euler equations together with an explicit forward time difference. Time-advanced fluxes at cell boundaries are computed using the analytic solution to Riemann's shock tube problem at each boundary. Initial conditions for each Riemann problem are determined by assuming the nonadvanced solution to be piecewise constant in each cell. Using the Riemann solution has the effect of introducing explicit nonlinearity into the difference equations and permits the calculation of sharp shock fronts and contact discontinuities without introducing significant nonphysical oscillations into the flow. Since the value of each variable in each cell is assumed to be constant, Godunov's method is limited to first-order accuracy in both space and time.

PPM improves on Godunov's method by representing the flow variables with piecewise parabolic functions. It also uses a monotonicity constraint rather than artificial viscosity to control oscillations near discontinuities, a feature shared with the MUSCL scheme of van Leer (1979). Although this could lead to a method which is accurate to third order, PPM is formally accurate only to second order in both space and time, as a fully third-order scheme proved not to be cost-effective. Nevertheless, PPM is considerably more accurate and efficient than most formally second-order algorithms.

PPM is particularly well-suited to flows involving discontinuities, such as shocks and contact discontinuities. The method also performs extremely well for smooth flows, although other schemes which do not perform the extra work necessary for the treatment of discontinuities might be more efficient in these cases. The high resolution and accuracy of PPM are obtained by the explicit nonlinearity of the scheme and through the use of intelligent dissipation algorithms, such as monotonicity enforcement, contact steepening, and interpolant flattening. These algorithms are described in detail by Colella and Woodward (1984).

A complete description of PPM is beyond the scope of this user's guide. However, for comparison with other codes, we note that the implementation of PPM in FLASH 1.x uses the direct Eulerian formulation of PPM and the technique for allowing nonideal equations of state described by Colella and Glaz (1985). For multidimensional problems, FLASH 1.x uses second-order operator splitting (Strang 1968). FLASH also implements PPM on a block-structured adaptive mesh, which is described in the following section.

We have used a package known as PARAMESH (MacNeice et al. 1999) for the parallelization and adaptive mesh refinement (AMR) portion of FLASH. PARAMESH consists of a suite of subroutines which handle refinement/derefinement, distribution of work to processors, guard cell filling, and flux conservation. In this section we briefly describe this package and the ways in which it has been modified for use with FLASH.

PARAMESH uses a block-structured adaptive mesh refinement scheme similar to others in the literature (e.g., Parashar 1999; Berger & Oliger 1984; Berger & Colella 1989; DeZeeuw & Powell 1993) as well as to schemes which refine on an individual cell basis (Khokhlov 1997). In block-structured AMR, the fundamental data structure is a block of uniform cells arranged in a logically Cartesian fashion. Each cell can be specified using a block identifier (processor number and local block number) and a coordinate triple (i,j,k), where i=1¼nxb, j=1¼nyb, and k=1¼nzb. The complete computational grid consists of a collection of blocks with different physical cell sizes, related to each other in a hierarchical fashion using a tree data structure. The blocks at the root of the tree have the largest cells, while their children have smaller cells and are said to be refined. Two rules govern the establishment of refined child blocks in PARAMESH. First, the cells of a refined child block must be one-half as large as those of its parent block. Second, a block's children must be nested; that is, the child blocks must fit within their parent block and cannot overlap one another, and the complete set of children of a block must fill its volume. Thus, in d dimensions a given block has either zero or 2d children.

Each block contains nxb×nyb×nzb interior cells and a set of guard cells. The guard cells contain boundary information needed to update the interior cells. These can be obtained from physically neighboring blocks, externally specified boundary conditions, or both. The number of guard cells needed depends upon the interpolation scheme and differencing stencil used for the hydrodynamics algorithm; for the explicit PPM algorithm distributed with FLASH, four guard cells are needed in each direction. PARAMESH handles the filling of guard cells with information from other blocks or a user-specified external boundary routine. If a block's neighbor has the same level of refinement, PARAMESH fills its guard cells using a direct copy from the neighbor's interior cells. If the neighbor has a different level of refinement, the neighbor's interior cells are used to interpolate guard cell values for the block. If the block and its neighbor are stored in the memory of different processors, PARAMESH handles the appropriate parallel communication (blocks are not split between processors). PARAMESH supports only linear interpolation for guard cell filling at jumps at refinement, but it is easily extended to allow other interpolation schemes; for example, for FLASH we have added a quadratic interpolation scheme. Once each block's guard cells are filled, it can be updated independently of the other blocks.

PARAMESH also enforces flux conservation at jumps in refinement, as described by Berger and Colella (1989). At jumps in refinement, the fluxes of mass, momentum, energy, and nuclear abundances across boundary cell faces are averaged across the fine cells at that face and provided to the corresponding coarse face on the neighboring block. These averaged fluxes are then used to update the values of the coarse block's local flow variables.

Each processor decides when to refine or derefine its blocks by computing a user-defined error estimator for each block. Refinement involves creation of between one and 2d refined child blocks, while derefinement involves deletion of a block. As child blocks are created, they are temporarily placed at the end of the processor's block list. After the refinements and derefinements are complete, the blocks are redistributed among the processors using a work-weighted Morton space-filling curve in a manner similar to that described by Warren and Salmon (1987) for a parallel treecode. During the distribution step each block is assigned a work value (an estimate of the relative amount of time required to update the block). The Morton number of the block is then computed by interleaving the bits of its integer coordinates as described by Warren and Salmon (1987); this determines its location along the space-filling curve. Finally, the list of all blocks is partitioned among the processors using the block weights, equalizing the estimated workload of each processor. During the sorting step, only Morton numbers and not block data are communicated between processors; blocks are only moved (if necessary) after the sort. Changes in refinement are typically performed every ten timesteps.

The refinement criterion used by PARAMESH is adapted from Löhner (1987). Löhner's error estimator was originally developed for finite element applications and has the advantage that it uses an entirely local calculation. Furthermore, the estimator is dimensionless and can be applied with complete generality to any of the field variables of the simulation or any combination of them (by default, PARAMESH uses the density and pressure). Löhner's estimator is a modified second derivative, normalized by the average of the gradient over one computational cell. In one dimension on a uniform mesh it is given by

| (7) |

| (8) |

It is common to call an equation of state routine more than 109 times when calculating two- and three-dimensional hydrodynamic models of stellar phenomena. Thus, it is very desirable to have an equation of state that is as efficient as possible, yet accurately represents the relevant physics. While FLASH is easily capable of including any equation of state, here we discuss three equation of state (henceforth EOS) routines which are supplied with FLASH 1.x: the ideal-gas or gamma-law EOS, the Nadyozhin EOS, and the Helmholtz EOS. (The Nadyozhin EOS is no longer supplied with FLASH versions 1.6 and later.)

Each EOS routine takes as input the temperature T, density r, mean number of nucleons per isotope [`A], and mean charge per isotope [`Z]. Each routine then returns as output the scalar pressure (Ptot, specific thermal energy etot, and entropy Stot. In addition to these quantities, the EOS routines return partial derivatives of the pressure, specific thermal energy, and entropy with respect to the density and temperature:

| (9) |

An EOS is thermodynamically consistent if its outputs satisfy the Maxwell relations:

| ||||||||||||||||||||||||||||||||||||||||||||||||||

The gamma-law EOS provided with FLASH represents a simple ideal gas with constant adiabatic index g:

| (13) |

More complex equations of state are necessary for realistic models of X-ray bursts, Type Ia supernovae, classical novae, and other stellar phenomena, where the electrons and positrons may be relativistic and/or degenerate and where radiation contributes significantly to the thermodynamic state. The Nadyozhin and Helmholtz EOS routines address this need. The Nadyozhin EOS, summarized by Nadyozhin (1974) and explained in detail by Blinnikov et al. (1996), was found by Timmes and Arnett (1999) in a comparison of five different analytic EOS schemes to give the best tradeoff among accuracy, thermodynamic consistency, and speed. The Helmholtz EOS (Timmes & Swesty 1999) uses interpolation from a two-dimensional table of the Helmholtz free energy F(r,T), obtaining comparable accuracy, better thermodynamic consistency, and about twice the speed of the Nadyozhin EOS.

For stellar phenomena, the pressure, specific thermal energy, and entropy contain contributions from several components:

| ||||||||||||||||||

| (17) |

| ||||||||||||||||

| (20) |

| (21) |

| (22) |

The Nadyozhin EOS routine uses polynomial or rational functions to evaluate the thermodynamic quantities. The temperature-density plane is decomposed into 5 regions (see Figure 12 of Blinnikov et al. 1996), with different expansions or fitting functions applied to each region. The methods used in the 5 regions are (1) a perfect gas approximation with the first-order corrections for degeneracy, (2) expansions of the half-integer Fermi-Dirac functions, (3) Chandrasekhar's (1939) expansion for a degenerate gas, (4) relativistic asymptotics, and (5) Gaussian quadrature for the thermodynamic quantities. The perturbation expansions, asymptotic relations, and fitting functions for the 5 regions are combined, with extraordinary care given to making sure that transitions between the regions are continuous, smooth, and thermodynamically consistent. All of the partial derivatives are obtained analytically.

The electron-positron Helmholtz free energy table used by the Helmholtz EOS was generated by using the Timmes EOS routine (Timmes & Arnett 1999). The Fermi-Dirac integrals, along with their derivatives with respect to h and b, were calculated to at least 18 significant figures using the quadrature schemes of Aparicio (1998). Newton-Raphson iteration was used to solve for h to at least 15 significant figures. The thermodynamic derivatives were computed analytically. Searches through the free energy table are avoided by computing hash indices from the values of any given (T,r[`Z]/[`A]) pair. No computationally expensive divisions are required in interpolating from the table; all of them can be computed and stored the first time the EOS routine is called.

Modelling thermonuclear flashes typically requires the energy generation rate due to nuclear burning over a large range of temperatures, densities and compositions. The average energy generated or lost over a period of time is found by integrating a system of ordinary differential equations (the nuclear reaction network) for the abundances of important nuclei and the total energy release. In some contexts, such as Type II supernova models, the abundances themselves are also of interest. In either case, the coefficients that appear in the equations typically are extremely sensitive to temperature. The resulting stiffness of the system of equations requires the use of an implicit time integration scheme.

Timmes (1999) has reviewed several methods for solving stiff nuclear reaction networks, providing the basis for the reaction network solver included with FLASH. The scaling properties and behavior of three semi-implicit time integration algorithms (a traditional first-order accurate Euler method, a fourth-order accurate Kaps-Rentrop method, and a variable order Bader-Deuflhard method) and eight linear algebra packages (LAPACK, LUDCMP, LEQS, GIFT, MA28, UMFPACK, and Y12M) were investigated by running each of these 24 combinations on seven different nuclear reaction networks (hard-wired 13- and 19-isotope networks and table-lookup networks using 47, 76, 127, 200, and 489 isotopes). Timmes' analysis suggested that the best balance of accuracy, overall efficiency, memory footprint, and ease-of-use was provided by the choice of a 13- or 19-isotope reaction network, the variable order Bader-Deuflhard time integration method, and the MA28 sparse matrix package. The default FLASH distribution uses this combination of methods, which we describe in this section.

We begin by describing the equations solved by the nuclear burning module. We consider material which may be described by a density r and a single temperature T and contains a number of isotopes i, each of which has Zi protons and Ai nucleons (protons + neutrons). Let ni and ri denote the number and mass density, respectively, of the ith isotope, and let Xi denote its mass fraction, so that

| (23) |

| (24) |

| (25) |

The general continuity equation for the ith isotope is given in Lagrangian formulation by

| (26) |

| (27) |

The case Vi º 0 is important for two reasons. First, mass diffusion is often unimportant when compared to other transport process such as thermal or viscous diffusion (i.e., large Lewis numbers and/or small Prandtl numbers). Such a situation obtains, for example, in the study of laminar flame fronts propagating through the quiescent interior of a white dwarf. Second, this case permits the decoupling of the reaction network solver from the hydrodynamical solver through the use of operator splitting, greatly simplifying the algorithm. This is the method used by the default FLASH distribution. Setting Vi º 0 transforms equation (26) into

| (28) |

| (29) |

Because of the highly nonlinear temperature dependence of the nuclear reaction rates, and because the abundances themselves often range over several orders of magnitude in value, the values of the coefficients which appear in equations (28) and (29) can vary quite significantly. As a result, the nuclear reaction network equations are ``stiff.'' A system of equations is stiff when the ratio of the maximum to the minimum eigenvalue of the Jacobian matrix [(J)\tilde] º ¶f/¶y is large and imaginary. This means that at least one of the isotopic abundances changes on a much shorter timescale than another. Implicit or semi-implicit time integration methods are generally necessary to avoid following this short-timescale behavior, requiring the calculation of the Jacobian matrix.

It is instructive at this point to look at an example of how equation (28) and the associated Jacobian matrix are formed. Consider the 12C(a,g)16O reaction, which competes with the triple-a reaction during helium burning in stars. The rate R at which this reaction proceeds is critical for evolutionary models of massive stars since it determines how much of the core is carbon and how much of the core is oxygen after the initial helium fuel is exhausted. This reaction sequence contributes to the right-hand side equation (29) through the terms

| ||||||||||||||||||

| ||||||||||||

The default FLASH distribution includes two reaction networks. The 13-isotope a-chain plus heavy-ion reaction network is suitable for most multi-dimensional simulations of stellar phenomena where having a reasonably accurate energy generation rate is of primary concern. The 19-isotope reaction network has the same a-chain and heavy-ion reactions as the 13-isotope network, but it includes additional isotopes to accommodate some types of hydrogen burning (PP and CNO chains), along with some aspects of photodisintegration into 54Fe. This reaction network is described in Weaver, Zimmerman, & Woosley (1978). Both the networks supplied with FLASH are examples of ``hard-wired'' reaction networks, where each of the reaction sequences are carefully entered by hand. This approach is suitable for small networks when minimizing the CPU time required to run the reaction network is a primary concern, although it suffers the disadvantage of inflexibility.

The Jacobian matrices of nuclear reaction networks tend to be sparse, and they become more sparse as the number of isotopes increases. Implicit time integration schemes require the inverse of the Jacobian matrix, so we need to use a sparse linear algebra package to compute this inverse. To control the iteration count for a prespecified accuracy, FLASH uses a direct sparse matrix solver. Direct methods typically divide the solution of [(A)\tilde] ·x = b into a symbolic LU decomposition, numerical LU decomposition, and a backsubstitution phase. In the symbolic LU decomposition phase the pivot order of a matrix is determined, and a sequence of decomposition operations which minimize the amount of fill-in is recorded. Fill-in refers to zero matrix elements which become nonzero (e.g., a sparse matrix times a sparse matrix is generally a denser matrix). The matrix is not (usually) decomposed; only the steps to do so are stored. Since the nonzero pattern of a chosen nuclear reaction network does not change, the symbolic LU decomposition is a one-time initialization cost for reaction networks. In the numerical LU decomposition phase, a matrix with the same pivot order and nonzero pattern as a previously factorized matrix is numerically decomposed into its lower-upper form. This phase must be done only once for each staged set of linear equations. In the backsubstitution phase, a set of linear equations is solved with the factors calculated from a previous numerical decomposition. The backsubstitution phase may be performed with as many right-hand sides as needed, and not all of the right-hand sides need to be known in advance.

The MA28 linear algebra package used by FLASH is described by Duff, Erisman, & Reid (1986). It uses a combination of nested dissection and frontal envelope decomposition to minimize fill-in during the factorization stage. An approximate degree update algorithm that is much faster (asymptotically and in practice) than computing the exact degrees is employed. One continuous real parameter sets the amount of searching done to locate the pivot element. When this parameter is set to zero, no searching is done and the diagonal element is the pivot, while when set to unity, complete partial pivoting is done. Since the matrices generated by reaction networks are usually diagonally dominant, the routine is set in FLASH to use the diagonal as the pivot element. Several test cases showed that using partial pivoting did not make a significant accuracy difference, but were less efficient since a search for an appropriate pivot element had to be performed. MA28 accepts the nonzero entries of the matrix in the (i, j, ai,j) coordinate system, and typically uses uses 70-90% less storage than storing the full dense matrix.

The time integration method used by FLASH for evolving the reaction networks is the variable order Bader-Deuflhard method (e.g., Bader & Deuflhard 1983; Press et al. 1992). The reaction network is advanced over a large time step H from yn to yn+1 by the following sequence of matrix equations. First,

| ||||||||||||||

| ||||||||||||||

| |||||||||||

The energy generation rate is given by the sum

| (35) |

| (36) |

Finally, the tabulation of Caughlan & Fowler (1988) is used in FLASH for most of the key nuclear reaction rates. Modern values for some of the reaction rates were taken from the reaction rate library of Hoffman (1999, priv. comm.). Nuclear reaction rate screening effects as implemented by Wallace, Woosley, & Weaver (1982), and decreases in the energy generation rate [(e)\dot]nuc due to neutrino losses as given by Itoh et al. (1985) are included in calculations done with FLASH.

In this section we describe several of the tools distributed with FLASH. The source code for these programs (except for setup) can be found in the main FLASH source tree under the tools/ directory.

The setup script, found in the FLASH root directory, provides the primary command-line interface to the FLASH source code. It configures the source tree for a given problem and target machine and creates files needed to parse the runtime parameter file and make the FLASH executable. More description of what setup does may be found in Section 6.1. Here we describe its basic usage.

Running setup without any options prints a message describing the available options:

[sphere 5:09pm] % ./setupAvailable values for the mandatory option (the name of the problem to configure) are determined by scanning the setups/ directory.

usage: setup <problem-name> [options]

problems: see ./setups

options: -verbose -portable -defaults -[123]d -maxblocks=<#>

[-site=<site> | -ostype=<ostype>]

For compatibility with older versions of setup, the syntax

setup <problem-name> <ostype> [options]

is also accepted, so long as <ostype> does not begin with a dash (-).

In this case the -site and -ostype options cannot be used.

A ``problem'' consists of a set of initial and boundary conditions, possibly additional physics (e.g., a subgrid model for star formation), and a set of adjustable parameters. The directory associated with a problem contains source code files which implement the initial and boundary conditions and extra physics, together with a configuration file, read by setup, which contains information on required physics modules and adjustable parameters.

setup determines site-dependent configuration information by looking in source/sites/ for a directory with the same name as the output of the hostname command; failing this, it looks in source/sites/Prototypes/ for a directory with the same name as the output of the uname command. The site and operating system type can be overridden with the -site and -ostype command-line options. Only one of these options can be used. The directory for each site or operating system type contains a makefile fragment ( Makefile.h) that sets command names, compiler flags, and library paths, and any replacement or additional source files needed to compile FLASH for that machine type.

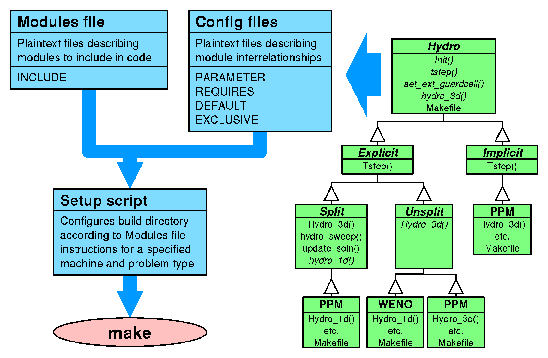

setup uses the problem and site/OS type, together with a user-supplied file called Modules which lists the code modules to include, to generate a directory called object/ which contains links to the appropriate source files and makefile fragments. It also creates the master makefile (object/Makefile) and several Fortran include files that are needed by the code in order to parse the runtime parameter file. After running setup, the user can make the FLASH executable by running gmake in the object/ directory (or from the FLASH root directory, if the -portable option is not used with setup). The optional command-line modifiers have the following interpretations:

| -verbose | Normally setup echoes to the standard output summary messages indicating what it is doing. Including the -verbose option causes it to also list the links it creates. |

| -portable | This option creates a portable build directory. Instead of object/, setup creates object_problem/, and it copies instead of linking to the source files in source/ and setups/. The resulting build directory can be placed into a tar archive and sent to another machine for building (use the Makefile created by setup in the tar file). |

| -defaults | Normally setup requires that the user supply a plain text file called Modules (in the FLASH root directory) which specifies which code modules to include. A sample Modules file appears in Figure 4. Each line is either a comment (preceded by a hash mark (#)) or a module include statement of the form INCLUDE module. Sub-modules are indicated by specifying the path to the sub-module in question; in the example, the sub-module gamma of the eos module is included. If a module has a default sub-module, but no sub-module is specified, setup automatically selects the default using the module's configuration file. |

| The -defaults option enables setup to generate a ``rough draft'' of a Modules file for the user. The configuration file for each problem setup specifies a number of code module requirements; for example, a problem may require the perfect-gas equation of state (materials/eos/gamma) and an unspecified hydro solver (hydro). With -defaults, setup creates a Modules file by converting these requirements into module include statements. In addition, it checks the configuration files for the required modules and includes any of their required modules, eliminating duplicates. Most users configuring a problem for the first time will want to run setup with -defaults to generate a Modules file, then edit Modules directly to specify different sub-modules. After editing Modules in this way, re-run setup without -defaults to incorporate the changes into the code configuration. | |

| -[123]d | By default setup creates a makefile which produces a FLASH executable capable of solving two-dimensional problems (equivalent to -2d). To generate a makefile with options appropriate to three-dimensional problems, use -3d. To generate a one-dimensional code, use -1d. These options are mutually exclusive and cause setup to add the appropriate compilation option to the makefile it generates. |

| -maxblocks=# | This option is also used by setup in constructing the makefile compiler options. It determines the amount of memory allocated at runtime to the adaptive mesh refinement (AMR) block data structure. For example, to allocate enough memory on each processor for 500 blocks, use -maxblocks=500. If the default block buffer size is too large for your system, you may wish to try a smaller number here (the defaults are currently defined in source/driver/physicaldata.fh). Alternatively, you may wish to experiment with larger buffer sizes if your system has enough memory. |

# Modules file constructed for rt problem by setup -defaults |

After configuring the source tree with setup and using gmake to create an executable in the build directory, look in the build directory for a file named rt_parms.txt. This contains a complete list of all of the runtime parameters available to the executable, together with their variable types, default values, and comments. To set runtime parameters to values other than the defaults, create a runtime parameter file named flash.par in the directory from which FLASH is to be run. The format of this file is described in Section 2.

The FLASH output comparison utility, or focu (pronounced faux-coup), is a command-line utility which compares FLASH output files according to specified criteria (e.g., local or global error criterion on any of the hydrodynamical variables) and reports whether they are the same or not. This is useful in determining whether a change to FLASH (or to the value of a runtime parameter) has changed the results obtained with a given problem.

The focu executable is configured and built in much the same way as is FLASH: obtain source tree, run setup to configure the source tree for a given architecture, and run gmake in the build directory. FLASH and focu are different in that focu is problem-independent, so only a machine type needs to be specified, and in that (at least at the present time) all of the modules included with focu are used, so setup is always run with the -defaults option. (See Section 4.1 for details regarding the usage of setup.) To summarize the steps in building focu:

Running focu is very similar to running FLASH itself. First prepare a runtime parameter file, then run focu using

mpirun -np N focu parameter-file(or use the command used by your system to start MPI programs). Here N is the number of processors to use (which need not be the same as the number of processors used by the FLASH job that produced the output files to be read). If the parameter-file argument is not supplied, focu looks for a parameter file named focu.par in the current directory. The format of this file is identical to that used by FLASH (see Section 2).

The runtime parameters understood by focu are as follows. Default values are listed in brackets following each description.

| file1 | Name of the first file to compare. [``file1''] |

| file2 | Name of the second file to compare. [``file2''] |

| variable | The name of the hydrodynamical variable to examine. Any variable included in the output file can be examined; for these variables the first four characters of the name are significant. Typical variables are ``dens,'' ``pres,'' ``velx,'' ``vely,'' ``velz,'' ``temp,'' and ``ener.'' Computed variables are also allowed: ``kinetic energy,'' ``potential energy,'' ``internal energy,'' ``x momentum,'' ``y momentum,'' and ``z momentum.'' [``density''] |

| criterion | The error criterion to apply in comparing the files. This must be of the form ``A B'', where A is either ``local'' or ``global,'' and B is either ``sum,'' ``min,'' or ``max.'' For the global criteria, focu first computes the volume-weighted average, minimum value, or maximum value of variable for each file, then computes the L1 deviation between the two global quantities. For the local criteria, the files are compared on a zone-by-zone basis. Consequently the AMR block structures of the two files must be the same in order for the comparison to succeed. If criterion is ``local sum,'' the volume-weighted average of the local L1 deviations is compared to threshold to determine success or failure. If criterion is ``local min'' or ``local max,'' the minimum or maximum L1 deviation is compared. The L1 deviation for two quantities X1 and X2 is defined as |X2-X1| / max{1/2|X1+X2|,10-99}. [``global sum''] |

| threshold | The error threshold to use in deciding success or failure. [10-6] |

| outfile | The name of the output file to create when generating the comparison report. Setting this to ``stdout'' causes focu to write to standard output. [``stdout''] |

FOCU: Flash Output Comparison Utility |

The output of focu is a short report listing the volume-averaged sum, minimum, and maximum deviations (local or global, depending on the setting of criterion) and reporting SUCCESS or FAILURE according to the supplied threshold. An example report generated by focu is shown in Figure 5. Note that the last line of the report begins with SUCCESS or FAILURE, making it easy for a script to test the results. For example, with csh one might use

set result = `mpirun -np 2 focu focu_sedov.par | grep SUCCESS`

if ("result" == "SUCCESS") then

...

endif

focu is used by the automated FLASH test script (Section 5.1) to compare FLASH outputs to the reference results supplied as part of the test suite.

fidlr is a set of routines, written in the IDL language, that can plot or read data files produced by FLASH. The routines include programs which can be run from the IDL command line to read 1D, 2D, or 3D FLASH datasets and interpolate them onto uniform grids. Other IDL programs (xflash and cousins) provide a graphical interface to these routines, enabling users to read and plot AMR datasets without interpolating onto a uniform mesh.

Currently, the fidlr routines support 1, 2, and 3-dimensional datasets in the FLASH HDF 4 or HDF 5 formats. Both plotfiles and checkpoint files are supported, as they differ only in the number of variables stored, and possibly the numerical precision. Since IDL does not directly support HDF 5, the call_external function is used to interface with a set of C routines that read in the HDF 5 data. Using these routines requires that the HDF 5 library be installed on your system and that the shared-object library be compiled before reading in data. Since the call_external function is used, the demo version of IDL will not run the routines.

For basic plotting operations, the easiest way to generate plots of FLASH data is to use one of the widget interfaces: xflash for 2d data, xflash1d for 1d data, and xflash3d for 3d data. For more advanced analysis, the read routines can be used to read the data into IDL, and it can then be accessed through the common blocks. Examples of using the fidlr routines from the command line are provided in Section 4.3.5. Additionally, some scripts demonstrating how to analyze FLASH data using the fidlr routines are described in Section 4.3.5 (see for example radial.pro).

The driver routines provided with fidlr visualize the AMR data by first converting it to a uniform mesh. This allows for ease of plotting and manipulation, but it is less efficient than plotting the native AMR structure. Analysis can still be performed directly on the native data through the command line.

fidlr is distributed with FLASH and contained in the tools/fidlr/ directory. In addition to the IDL procedures, a README file is present which will contain up-to-date information on changes and installation requirements.

These routines were written and tested using IDL v5.3 for IRIX. They should work without difficulty on any UNIX machine with IDL installed. Any functionality of fidlr under Windows is purely coincidental. Later versions of IDL are rumored to no longer support GIF files due to copyright difficulties, so outputting to a image file may not work. It is possible to run IDL in a `demo' mode if there are not enough licenses available. Unfortunately, some parts of fidlr will not function in this mode, as certain features of IDL are disabled. This guide assumes that you are running the full version of IDL.

Installation of fidlr requires defining some environment variables, and compiling the support for HDF 5 files. These procedures are described below.

Setting up fidlr environment variables

The FLASH IDL routines are located in the tools/fidlr/ subdirectory

of the FLASH root directory. To use them you must set two environment

variables. First set the value of XFLASH_DIR to the location of

the FLASH IDL routines; for example, under csh, use

setenv XFLASH_DIR flash-root-path/tools/fidlrwhere flash-root-path is the absolute path of the FLASH root directory. This variable is used in the plotting routines to find the customized color table for xflash, as well as to identify the location of the shared-object libraries compiled for HDF 5 support.

Next add this directory to your IDL_PATH variable:

setenv IDL_PATH "${XFLASH_DIR}:$IDL_PATH"If you have not previously defined IDL_PATH, omit :$IDL_PATH from the above command. You may wish to include these commands in your .cshrc file (or profile if you use another shell) to avoid having to reissue them every time you log in.

Setting up the HDF 5 routines

For fidlr to read HDF 5 files, you need to install the HDF 5 library

on your machine and compile the wrapper routines. The HDF 5 libraries

can be obtained in either source or binary form for most Unix

platforms from http://hdf.ncsa.uiuc.edu. The Makefile supplied

will create the shared-object library on an SGI, but will need to be

edited for other platforms. The compiler flags for a shared-object

library for different versions of Unix can be found in

$IDL_DIR/external/call_external/C/callext_unix.txtwhere $IDL_DIR points to the location of IDL. The Makefile should be modified according to the instructions in that file. The Makefile needs to know where the HDF 5 library is installed as well. This is set through the HDF5path definition in the Makefile.

It is important that you compile the shared-object to conform to the same application binary interface (ABI) that IDL was compiled with. On an SGI, IDL version 5.2.1 and later use the n32 ABI, while versions before this are o32. The HDF library will also need to be compiled in the same format.

IDL interacts with external programs through the call_external function. Any arguments are passed by reference through argc and argv. These are recast into pointers of the proper type in the C routines. The C wrappers call the appropriate HDF functions to read the data and return it through the argc pointers.

Running IDL

fidlr uses 8-bit color tables for all of its plotting. On displays

with higher colordepths, it may be necessary to use color overlays

to get the proper colors on your display. For SGI machines, launching

IDL with the start.pro script with enable 8-bit pseudocolor overlays.

The fidlr routines access the data contained in the FLASH output files through a series of common blocks. For the most part, the variable names are consistent with the names used in FLASH. It is assumed that the user is familiar with the block structured AMR format employed by FLASH. All of the unknowns are stored together in the unk data-structure, which mirrors that contained in FLASH itself. The list of variable names is contained in the string array unk_names. A utility function var_index is provided to convert between a 4-character string name and the index into unk. For example,

unk[var_index('dens'),*,*,*,*]selects the density from the unk array. Recall that IDL uses zero-based indexing for arrays.

fidlr will attempt to create derived variables from the variables stored in the output file if it can. For example, the total velocity can be created from the components of the velocity, if they are all present. A separate data structure varnames contains the list of variables in the output file plus any additional variables fidlr knows how to derive.

At present, the global common blocks defined by fidlr are:

common size, tot_blocks, nvar, nxb, nyb, nzb, ntopx, ntopy, ntopzThese can be defined on the command line by executing the def_common script provided in the fidlr directory. This allows for analysis of the FLASH data through the command line with any of IDLs built in functions. For example, to find the minimum density in the leaf blocks of the file filename_hdf_chk_0000, you could execute:

common time, time, dt

common tree, lrefine, nodetype, gid, coord, size, bnd_box

common vars, unk, unk_names

IDL> .run def_commonHere, in addition to the unk data-structure, we used the nodetype array, which carries the same meaning as it does in FLASH.

IDL> read_amr, 'filename_hdf_chk_0000'

IDL> leaf_blocks = where(nodetype EQ 1)

IDL> print, min(unk[var_index('dens'),*,*,*,leaf_blocks])

The main interface to the fidlr routines for plotting 2-dimensional data sets is xflash. xflash produces colormap plots of FLASH data with optional velocity vectors, contours, and the AMR block structure overlaid. The basic operation of xflash is to specify a file or range of files, probe the file for the list of variables it contains (through the discover variables button described below), and then specify the remaining plot options.

xflash can output to the screen, postscript, or an image file (GIF). If the data is significantly higher resolution than the output device, then xflash (through xplot_amr.pro) will sub-sample the image by one or more levels of refinement before plotting.

Once the image is plotted, the query button will become active. Pressing query and then clicking anywhere in the domain will pop up a window containing the values of all the FLASH variables in the zone nearest the cursor.

Typing xflash at the IDL command prompt will launch the main xflash widget, shown in Figure 6(a). The widget is broken into several sections, with some features initially disabled. These sections are explained below.

File Options

The file to be visualized is composed of the path, the basename (the same base name used in the flash.par) plus any file type information appended to it (ex. 'hdf_chk_') and the range of suffices to loop through. An optional parameter, step, controls how many files to skip when looping over the files. By default, xflash sets the path to the working directory that IDL was started from. xflash will spawn gzip if the file is stored on disk compressed and the file is gzipped box is checked. After the file is read, it will be compressed again.

Once the file options are set, clicking on discover variables will read the variable list from the first file specified, and use the variable names to populate the variable selection box. xflash will automatically determine if the file is an HDF 4 or 5 file, and read the `unknown names' record to get the variable list. This will work for both plotfiles and checkpoint files generated by FLASH.

Output Options

A plot can be output to the screen (default), a Postscript file, or a GIF file. The output filenames are composed from the basename + variable name + suffix. For outputs to the screen or GIF, the plot size options allow you to specify the image size in pixels. For Postscript output, xflash chooses portrait or landscape orientation depending on the aspect ratio of the domain.

Problem

The problem dropbox allows you to select one of the predefined problem defaults. This will load the options (data ranges, velocity parameters, and contour options) for the problem as specified in the xflash_defaults procedure. When xflash is started, xflash_defaults is executed to read in the known problem names. To add a problem to xflash, only the xflash_defaults procedure needs to be modified. The details of this procedure are provided in the comment header in xflash_defaults. It is not necessary to add a problem in order to plot a dataset, as all default values can be overridden through the widget.

Variables

The variables dropbox lists the variables stored in the `unknown names' record in the data file, and any derived variables that xflash knows how to construct from these variables (ex: sound speed). This allows you to choose the variable to be plotted. By default, xflash reads all the variables in a file, so switching the variable to plot can be done without rereading.

Colormap

The colormap dropbox lists the available colormaps to xflash. These colormaps are stored in flash_colors.tbl in the fidlr directory, and differ from the standard IDL colormaps. The first 12 colors in the colormaps are reserved by xflash to hold the primary colors used for different aspects of the plotting. Additional colormaps can be created by using the xpalette function in IDL.

Options

The options block allows you to toggle various options on/off (Table 3).

Data Range

These fields allow you to specify the range of the variable to plot. Data outside of the range will be scaled to the minimum and maximum values of the colormap respectively.

Contour Options

This launches a dialog box that allows you to select up to 4 contour lines to overplot of the data (see figure 7). The variable, value, and color are specified for each reference contour. To plot a contour, select the check box next to the contour number. This will allow you to set the variable to make the contour from, the value of the contour, and the color.

|

|

Velocity Options

This launches a dialog box that allows you to set the velocity options used to plot velocity vectors on the plot (see figure 8). The plotting of velocity vectors is controlled by the partvelvec.pro procedure. xskip and yskip allow you to thin out the arrows. typical velocity sets the velocity to scale the vectors to, and minimum velocity and maximum velocity specify the range of velocities to plot vectors for.

|

|

Zoom

The zoom options allow you to set the domain limits for the plot. A value of -1 uses the actual limit of the domain.

Plot

Create the plot. The status of the plot will appear on the status bar at the bottom.

Query

The query button becomes active once the plot is created. Clicking on

query and then clicking somewhere in the domain lists the

data values at the cursor location (see Figure 9).

|

|

xflash3d provides an interface for plotting slices of 3-dimension FLASH datasets. It is written to conserve memory-only a single variable is read in at a time, and only the slice to be plotted is put on a uniform grid. The merging of the AMR data to a uniform grid is accomplished by merge3d_amr.pro which can take a variety of arguments which control what volume of the domain is to be put onto a uniform grid.

|

|

Figure 10 shows the main xflash3d widget. The interface is similar to that of xflash, so the above description from that section still applies. The major difference is in the zoom section, which controls the slice plane.

The slice plane button group controls the plane to plot the data in. For a given plane, the direction orthogonal will have only on active text box to enter zoom information-this controls the position of the slice plane in the normal direction. For the directions in the slice plane, the zoom boxes allow you to select a sub-domain in that plane to plot.

Table 4 lists all of the fidlr routines, grouped by function. Most of these routines rely on the common blocks to get the tree structure necessary to interpret a FLASH dataset. Command line analysis of FLASH data requires that the common blocks be defined, usually by executing def_common.pro.

Most of the fidlr routines can be used directly from the command line

to perform analysis not offered by the different widget interfaces. This

section provides an example of using the fidlr routines.

Example. Generate a contour plot of density from a 3-d dataset in the

x-z plane at the y midpoint.

IDL> .run def_common

IDL> read_amr, 'filename_hdf_chk_0000'

IDL> sdens = merge3d_amr(unk[var_index('dens'),*,*,*,*], $

IDL> xmerge=x, ymerge=y, zmerge=z)

IDL> help, sdens

sdens FLOATArray[256, 256, 256]

IDL> contour, reform(sdens[*,128,*]), x, z

To verify that FLASH works as expected, and to debug changes in the code, we have created a suite of standard test problems. Most of these problems have analytical solutions which can be used to test the accuracy of the code. The remaining problems do not have analytical solutions, but they produce well-defined flow features which have been verified by experiments and are stringent tests of the code. The test suite configuration code is included with the FLASH source tree (in the setups/ directory), so it is easy to configure and run FLASH with any of these problems `out of the box.' Sample runtime parameter files are also included. Files containing reference results for the test suite tend to be large and so are distributed separately (via the FLASH_TEST CVS tree or in archive form as FLASH_TEST.tar). The FLASH_TEST tree also contains the runtime parameter files needed to reproduce the reference results.

Included with the test suite results is a script named flash_test which automates the process of comparing test suite results with the reference results. flash_test takes one argument, the machine type (run flash_test with no arguments for a list of available types). It automatically configures and builds FLASH for each of the test problems, builds the focu output comparison utility (Section 4.2), runs FLASH with each test problem, and uses focu to compare the results. It prepares a report for the entire suite of problems, flagging compilation problems, catastrophic runtime errors, and erroneous results. Since flash_test can be quite time-consuming to execute for the full test suite, it is generally a good idea to execute it as a batch job on the target system. Note that the tests may be run individually using the runtime parameter files provided with the FLASH test suite. Plots similar to those in this section can be produced using IDL routines provided with the test suite; these make use of the FLASH IDL tools (Section 4.3).

The Sod problem (Sod 1978) is an essentially one-dimensional flow discontinuity problem which provides a good test of a compressible code's ability to capture shocks and contact discontinuities with a small number of zones and to produce the correct density profile in a rarefaction. It also tests a code's ability to correctly satisfy the Rankine-Hugoniot shock jump conditions. When implemented at an angle to a multidimensional grid, it can also be used to detect irregularities in planar discontinuities produced by grid geometry or operator splitting effects.

We construct the initial conditions for the Sod problem by establishing a planar interface at some angle to the x and y axes. The fluid is initially at rest on either side of the interface, and the density and pressure jumps are chosen so that all three types of flow discontinuity (shock, contact, and rarefaction) develop. To the ``left'' and ``right'' of the interface we have

| (37) |

In FLASH, the Sod problem (sod) uses the runtime parameters listed in Table 5 in addition to the regular ones supplied with the code. For this problem we use the gamma equation of state module and set the value of the parameter gamma supplied by this module to 1.4. The default values listed in Table 5 are appropriate to a shock with normal parallel to the x-axis which initially intersects that axis at x=0.5 (halfway across a box with unit dimensions).

| Variable | Type | Default | Description |

| rho_left | real | 1 | Initial density to the left of the interface (rL) |

| rho_right | real | 0.125 | Initial density to the right (rR) |

| p_left | real | 1 | Initial pressure to the left (pL) |

| p_right | real | 0.1 | Initial pressure to the right (pR) |

| u_left | real | 0 | Initial velocity (perpendicular to interface) to the left (uL) |

| u_right | real | 0 | Initial velocity (perpendicular to interface) to the right (uR) |

| xangle | real | 0 | Angle made by interface normal with the x-axis (degrees) |

| yangle | real | 90 | Angle made by interface normal with the y-axis (degrees) |

| posn | real | 0.5 | Point of intersection between the interface plane and the x-axis |

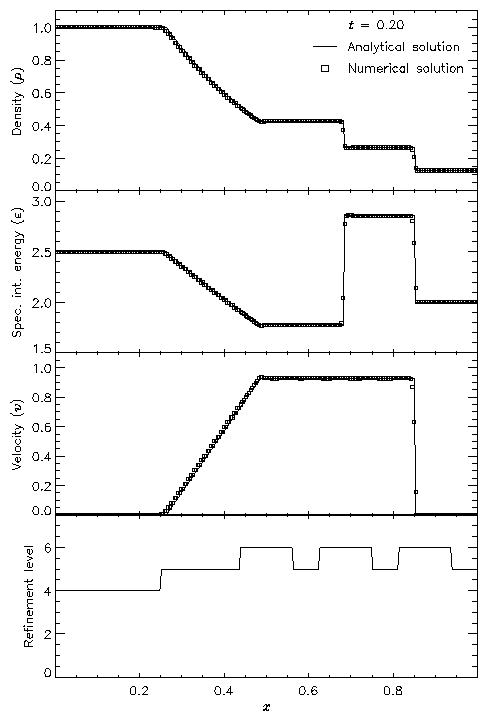

Figure 11 shows the result of running the Sod problem with FLASH on a two-dimensional grid, with the analytical solution shown for comparison. The hydrodynamical algorithm used here is the directionally split piecewise-parabolic method (PPM) included with FLASH. In this run the shock normal is chosen to be parallel to the x-axis. With six levels of refinement, the effective grid size at the finest level is 2562, so the finest zones have width 0.00390625. At t=0.2 three different nonlinear waves are present: a rarefaction between x » 0.25 and x » 0.5, a contact discontinuity at x » 0.68, and a shock at x » 0.85. The two sharp discontinuities are each resolved with approximately three zones at the highest level of refinement, demonstrating the ability of PPM to handle sharp flow features well. Near the contact discontinuity and in the rarefaction we find small errors of about 1-2% in the density and specific internal energy, with similar errors in the velocity inside the rarefaction. Elsewhere the numerical solution is exact; no oscillation is present.

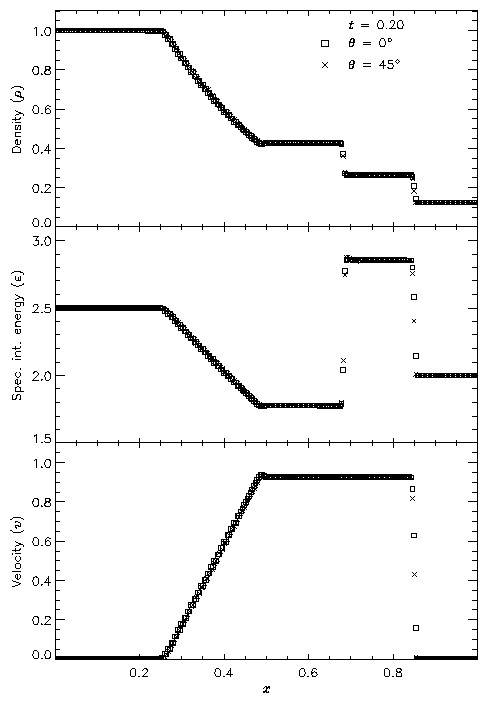

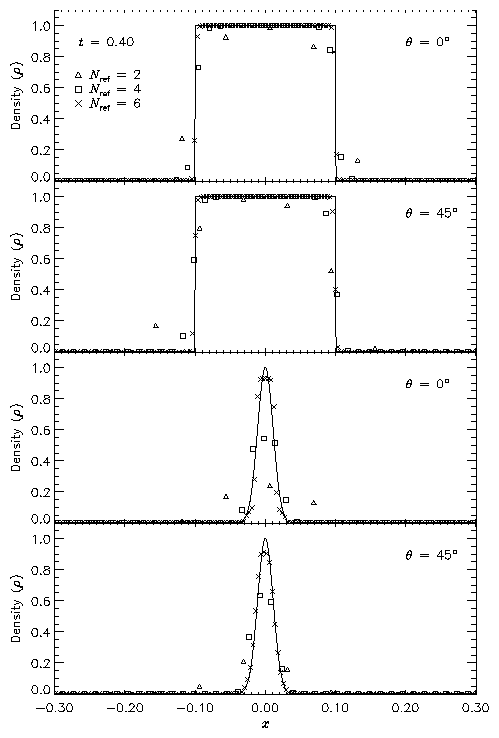

Figure 12 shows the result of running the Sod problem on the same two-dimensional grid with different shock normals: parallel to the x-axis (q = 0°) and along the box diagonal (q = 45°). For the diagonal solution we have interpolated values of density, specific internal energy, and velocity to a set of 256 points spaced exactly as in the x-axis solution. This comparison shows the effects of the second-order directional splitting used with FLASH on the resolution of shocks. At the right side of the rarefaction and at the contact discontinuity the diagonal solution undergoes slightly larger oscillations (on the order of a few percent) than the x-axis solution. Also, the value of each variable inside the discontinuity regions differs between the two solutions by up to 10% in most cases. However, the location and thickness of the discontinuities is the same between the two solutions. In general shocks at an angle to the grid are resolved with approximately the same number of zones as shocks parallel to a coordinate axis.

Figure 13 presents a grayscale map of the density at t=0.2 in the diagonal solution together with the block structure of the AMR grid in this case. Note that regions surrounding the discontinuities are maximally refined, while behind the shock and discontinuity the grid has de-refined as the second derivative of the density has decreased in magnitude. Because zero-gradient outflow boundaries were used for this test, some reflections are present at the upper left and lower right corners, but at t=0.2 these have not yet propagated to the center of the grid.

This problem was originally used by Woodward and Colella (1984) to compare the performance of several different hydrodynamical methods on problems involving strong, thin shock structures. It has no analytical solution, but since it is one-dimensional, it is easy to produce a converged solution by running the code with a very large number of zones, permitting an estimate of the self-convergence rate when narrow, interacting discontinuities are present. For FLASH it also provides a good test of the adaptive mesh refinement scheme.

The initial conditions consist of two parallel, planar flow discontinuities. Reflecting boundary conditions are used. The density in the left, middle, and right portions of the grid (rL, rM, and rR, respectively) is unity; everywhere the velocity is zero. The pressure is large to the left and right and small in the center:

| (38) |

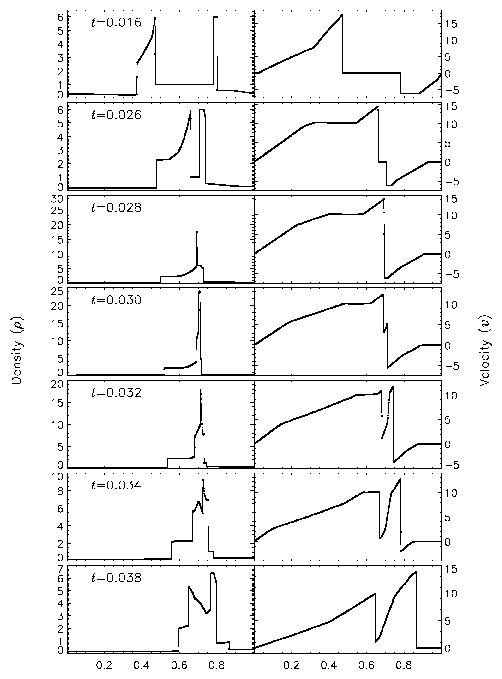

Figure 14 shows the density and velocity profiles at several different times in the converged solution, demonstrating the complexity inherent in this problem. The initial pressure discontinuities drive shocks into the middle part of the grid; behind them, rarefactions form and propagate toward the outer boundaries, where they are reflected back onto the grid and interact with themselves. By the time the shocks collide at t=0.028, the reflected rarefactions have caught up to them, weakening them and making their post-shock structure more complex. Because the right-hand shock is initially weaker, the rarefaction on that side reflects from the wall later, so the resulting shock structures going into the collision from the left and right are quite different. Behind each shock is a contact discontinuity left over from the initial conditions (at x » 0.50 and 0.73). The shock collision produces an extremely high and narrow density peak; in the Woodward and Colella calculation the peak density is slightly less than 30. Even with ten levels of refinement, FLASH obtains a value of only 18 for this peak. Reflected shocks travel back into the colliding material, leaving a complex series of contact discontinuities and rarefactions between them. A new contact discontinuity has formed at the point of the collision (x » 0.69). By t=0.032 the right-hand reflected shock has met the original right-hand contact discontinuity, producing a strong rarefaction which meets the central contact discontinuity at t=0.034. Between t=0.034 and t=0.038 the slope of the density behind the left-hand shock changes as the shock moves into a region of constant entropy near the left-hand contact discontinuity.

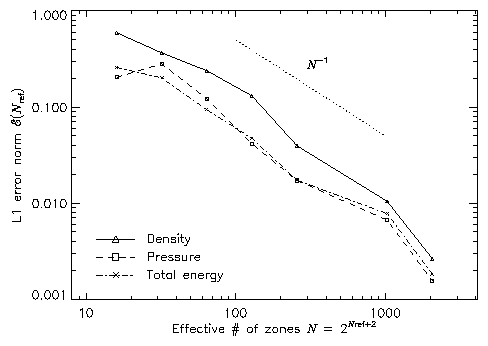

Figure 15 shows the self-convergence of density and pressure when FLASH is run on this problem. For several runs with different maximum refinement levels, we compare the density, pressure, and total specific energy at t=0.038 to the solution obtained using FLASH with ten levels of refinement. This figure plots the L1 error norm for each variable u, defined using

| (39) |

Although PPM is formally a second-order method, one sees from this plot that, for the interacting blast-wave problem, the convergence rate is only linear. Indeed, in their comparison of the performance of seven nominally second-order hydrodynamic methods on this problem, Woodward and Colella found that only PPM achieved even linear convergence; the other methods were worse. The error norm is very sensitive to the correct position and shape of the strong, narrow shocks generated in this problem.

The additional runtime parameters supplied with the 2blast problem are listed in Table 6. This problem is configured to use the perfect-gas equation of state (gamma), and it is run in a two-dimensional unit box with gamma set to 1.4. Boundary conditions in the y direction (transverse to the shock normals) are taken to be periodic.

| Variable | Type | Default | Description |

| rho_left | real | 1 | Initial density to the left of the left interface (rL) |

| rho_mid | real | 1 | Initial density between the interfaces (rM) |

| rho_right | real | 1 | Initial density to the right of the right interface (rR) |

| p_left | real | 1000 | Initial pressure to the left (pL) |

| p_mid | real | 0.01 | Initial pressure in the middle (pM) |

| p_right | real | 100 | Initial pressure to the right (pR) |

| u_left | real | 0 | Initial velocity (perpendicular to interface) to the left (uL) |

| u_mid | real | 0 | Initial velocity (perpendicular to interface) in the middle (uL) |

| u_right | real | 0 | Initial velocity (perpendicular to interface) to the right (uR) |

| xangle | real | 0 | Angle made by interface normal with the x-axis (degrees) |

| yangle | real | 90 | Angle made by interface normal with the y-axis (degrees) |

| posnL | real | 0.1 | Point of intersection between the left interface plane and the x-axis |

| posnR | real | 0.9 | Point of intersection between the right interface plane and the x-axis |

The Sedov explosion problem (Sedov 1959) is another purely hydrodynamical test in which we check the code's ability to deal with strong shocks and non-planar symmetry. The problem involves the self-similar evolution of a cylindrical or spherical blast wave from a delta-function initial pressure perturbation in an otherwise homogeneous medium. To initialize the code, we deposit a quantity of energy E=1 into a small region of radius dr at the center of the grid. The pressure inside this volume, p0¢, is given by

| (40) |

| (41) |

| ||||||||||||||||||||||

| ||||||||||||||||

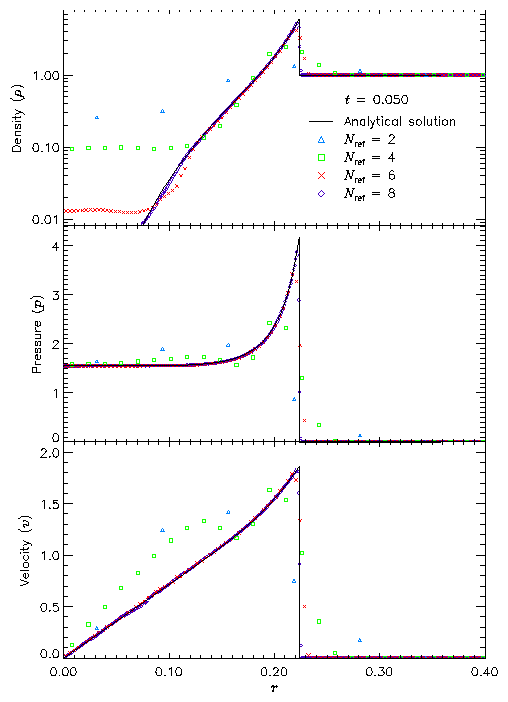

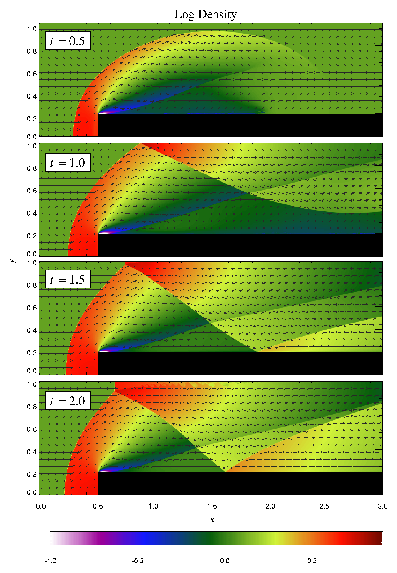

Figure 16 shows density, pressure, and velocity profiles in the two-dimensional Sedov problem at t=0.05. Solutions obtained with FLASH on grids with 2, 4, 6, and 8 levels of refinement are shown in comparison with the analytical solution. In this figure we have computed average radial profiles in the following way. We interpolated solution values from the adaptively gridded mesh used by FLASH onto a uniform mesh having the same resolution as the finest AMR blocks in each run. Then, using radial bins with the same width as the zones in the uniform mesh, we binned the interpolated solution values, computing the average value in each bin. At low resolutions, errors show up as density and velocity overestimates behind the shock, underestimates of each variable within the shock, and a numerical precursor spanning 1-2 zones in front of the shock. However, the central pressure is accurately determined, even for two levels of refinement; because the density goes to a finite value rather than its correct limit of zero, this corresponds to a finite truncation of the temperature (which should go to infinity as r® 0). As resolution improves, the artificial finite density limit decreases; by Nref=6 it is less than 0.2% of the peak density. Except for the Nref=2 case, which does not show a well-defined peak in any variable, the shock itself is always captured with about two zones. The region behind the shock containing 90% of the swept-up material is represented by four zones in the Nref=4 case, 17 zones in the Nref=6 case, and 69 zones for Nref=8. However, because the solution is self-similar, for any given maximum refinement level the shock will be four zones wide at a sufficiently early time. The behavior when the shock is underresolved is to underestimate the peak value of each variable, particularly the density and pressure.

Figure 17 shows the pressure field in the 8-level calculation at t=0.05 together with the block refinement pattern. Note that a relatively small fraction of the grid is maximally refined in this problem. Although the pressure gradient at the center of the grid is small, this region is refined because of the large temperature gradient there. This illustrates the ability of PARAMESH to refine grids using several different variables at once.

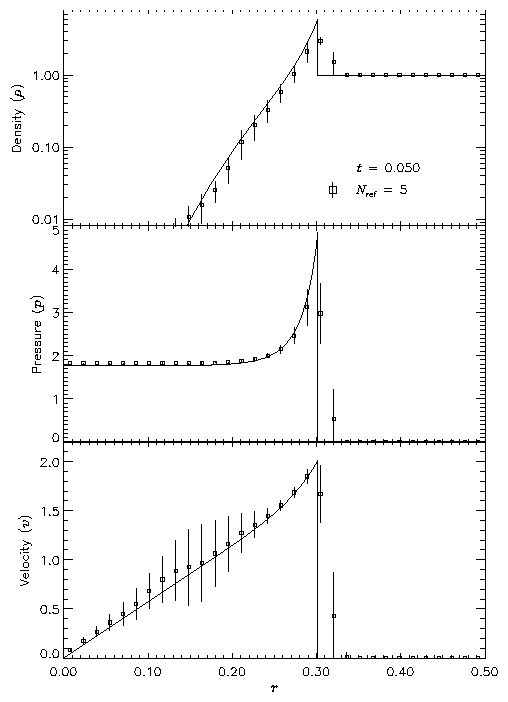

We have also run FLASH on the spherically symmetric Sedov problem in order to verify the code's performance in three dimensions. The results at t=0.05 using five levels of grid refinement are shown in Figure 18. In this figure we have plotted the root-mean-square (RMS) numerical solution values in addition to the average values. As in the two-dimensional runs, the shock is spread over about two zones at the finest AMR resolution in this run. The width of the pressure peak in the analytical solution is about 1 1/2 zones at this time, so the maximum pressure is not captured in the numerical solution. Behind the shock the numerical solution average tracks the analytical solution quite well, although the Cartesian grid geometry produces RMS deviations of up to 40% in the density and velocity in the derefined region well behind the shock. This behavior is similar to that exhibited in the two-dimensional problem at comparable resolution.

| Variable | Type | Default | Description |

| p_ambient | real | 10-5 | Initial ambient pressure (p0) |

| rho_ambient | real | 1 | Initial ambient density (r0) |

| exp_energy | real | 1 | Explosion energy (E) |

| r_init | real | 0.05 | Radius of initial pressure perturbation (dr) |

| xctr | real | 0.5 | x-coordinate of explosion center |

| yctr | real | 0.5 | y-coordinate of explosion center |

| zctr | real | 0.5 | z-coordinate of explosion center |

The additional runtime parameters supplied with the sedov problem are listed in Table 7. This problem is configured to use the perfect-gas equation of state (gamma), and it is run in a unit box with gamma set to 1.4.

In this problem we create a planar density pulse in a region of uniform pressure p0 and velocity u0, with the velocity normal to the pulse plane. The density pulse is defined via

| (44) |

| (45) |

| (46) |

The advection problem tests the ability of the code to handle planar geometry, as does the Sod problem. It is also like the Sod problem in that it tests the code's treatment of flow discontinuities which move at one of the characteristic speeds of the hydrodynamical equations. (This is difficult because noise generated at a sharp interface, such as the contact discontinuity in the Sod problem, tends to move with the interface, accumulating there as the calculation advances.) However, unlike the Sod problem it compares the code's treatment of leading and trailing contact discontinuities (for the square pulse), and it tests the treatment of narrow flow features (for both the square and Gaussian pulse shapes). Many hydrodynamical methods have a tendency to clip narrow features or to distort pulse shapes by introducing artificial dispersion and dissipation (Zalesak 1987).

| Variable | Type | Default | Description |

| rhoin | real | 1 | Characteristic density inside the advected pulse (r1) |

| rhoout | real | 10-5 | Ambient density (r0) |

| pressure | real | 1 | Ambient pressure (p0) |

| velocity | real | 10 | Ambient velocity (u0) |

| width | real | 0.1 | Characteristic width of advected pulse (w) |

| pulse_fctn | integer | 1 | Pulse shape function to use: 1=square wave, 2=Gaussian |

| xangle | real | 0 | Angle made by pulse plane with x-axis (degrees) |

| yangle | real | 90 | Angle made by pulse plane with y-axis (degrees) |

| posn | real | 0.25 | Point of intersection between pulse midplane and x-axis |

The additional runtime parameters supplied with the advect problem are listed in Table 8. This problem is configured to use the perfect-gas equation of state (gamma), and it is run in a unit box with gamma set to 1.4. (The value of g does not affect the analytical solution, but it does affect the timestep.)

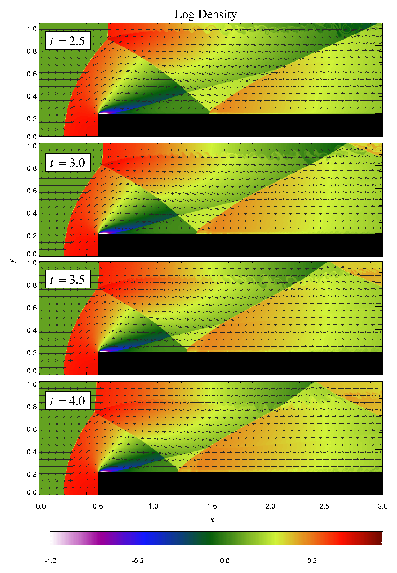

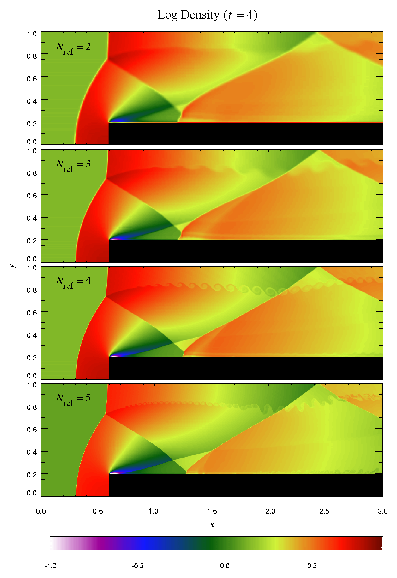

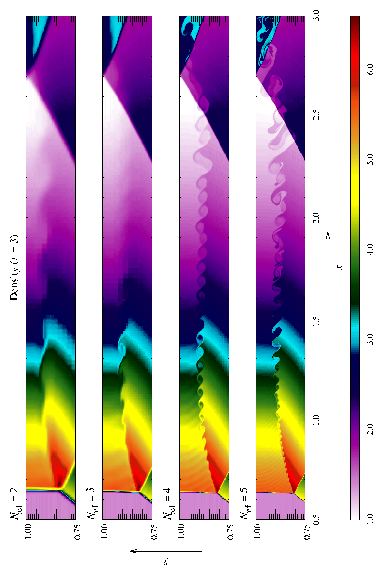

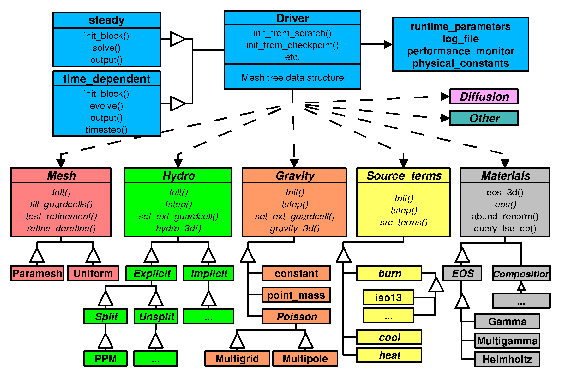

To demonstrate the performance of FLASH on the advection problem, we have performed tests of both the square and Gaussian pulse profiles with the pulse normal parallel to the x-axis (q = 0°) and at an angle to the x-axis (q = 45°) in two dimensions. The square pulse used r1=1, r0=10-3, p0=10-6, u0=1, and w=0.1. With six levels of refinement in the domain [0,1]×[0,1], this value of w corresponds to having about 52 zones across the pulse width. The Gaussian pulse tests used the same values of r1, r0, p0, and u0, but with w=0.015625. This value of w corresponds to about 8 zones across the pulse width at six levels of refinement. For each test we performed runs at two, four, and six levels of refinement to examine the quality of the numerical solution as the resolution of the advected pulse improves. The runs with q = 0° used zero-gradient (outflow) boundary conditions, while the runs performed at an angle to the x-axis used periodic boundaries.